Project Description

Farmers are under growing pressure to raise production to feed an expanding population, while also considering environmental impact and constraints on land, water and labour. Increasingly large farm machines cause significant soil compaction and are a single point of failure. Hence, in this project we developed technology for small, inexpensive, cooperative agricultural robots. The project comprised five themes:

- vision-based obstacle detection,

- vision-based localisation,

- docking for refill,

- multi-robot coordination,

- and navigation.

This project was an Australian Research Council (ARC) Linkage project awarded to Queensland University of Technology (QUT), the Australian Centre for Field Robotics and industry partner SwarmFarm Robotics.

The AgBot robot platform. We converted a utility vehicle into an autonomous robot. Thank you to Ray Russell from RoPro Design for help with the conversion kit.

I managed the engineering and research program for this project. In 2013 we received a “QUT Vice Chancellor Team Performance Award” for our teamwork on this project. Many of the outcomes were due to the amazing and dedicated work of Andrew English and Patrick Ross.

During the project we spent three months testing the robot on a farm near Emerald. A big thank you to Andrew and Jocie Bate for their kind and generous hospitality while we were on their farm. Their company, SwarmFarm Robotics, is commercialising the technology. Check them out!

During this project we had the opportunity to give a demonstration to the Queensland Premier, Campbell Newman. This demonstration helped lead to funding for the “Strategic Investment in Farm Robotics” project.

Docking for refill

To relieve farmers of the need to frequently replenish robots with consumables such as herbicide, fuel and seed, we designed a docking system. This allows persistent, long-term operation of many autonomous robots. In this project we demonstrated refilling liquid from a dock using the AgBot robot. The key problem is guiding the robot accurately into the dock, because GPS alone is not accurate enough for docking. We demonstrated two techniques for accurate docking:

- Using the robot’s cameras to detect AR markers, and then using these detections to determine the robot’s location with respect to the dock.

- Using a two-link revolute robot arm to position the dock’s nozzle over the robot’s spray tank opening.
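A minimal sketch of the geometry behind the marker-based technique, assuming planar (x, y, heading) poses and a marker detector that reports the marker's pose in the camera frame. Real AR marker libraries return full 6-DoF poses, so projecting onto the ground plane is a simplification, and `Pose2D`, `robot_pose_in_dock` and the frame names are hypothetical, not the project's code. Chaining the surveyed marker pose on the dock, the inverted camera measurement and the inverted camera mounting pose gives the robot's pose in the dock frame:

```python
import math
from dataclasses import dataclass


def wrap_angle(angle: float) -> float:
    """Wrap an angle to [-pi, pi]."""
    return math.atan2(math.sin(angle), math.cos(angle))


@dataclass(frozen=True)
class Pose2D:
    """Planar rigid transform a_T_b: the pose of frame b expressed in frame a."""
    x: float      # metres
    y: float      # metres
    theta: float  # radians

    def compose(self, other: "Pose2D") -> "Pose2D":
        """a_T_b.compose(b_T_c) -> a_T_c."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      wrap_angle(self.theta + other.theta))

    def inverse(self) -> "Pose2D":
        """a_T_b.inverse() -> b_T_a."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-c * self.x - s * self.y,
                      s * self.x - c * self.y,
                      wrap_angle(-self.theta))


def robot_pose_in_dock(dock_T_marker: Pose2D, cam_T_marker: Pose2D,
                       robot_T_cam: Pose2D) -> Pose2D:
    """Chain dock -> marker -> camera -> robot (hypothetical helper).

    dock_T_marker: surveyed pose of the marker on the dock (fixed).
    cam_T_marker:  marker pose measured in the current camera image.
    robot_T_cam:   camera mounting pose from extrinsic calibration.
    """
    return dock_T_marker.compose(cam_T_marker.inverse()).compose(robot_T_cam.inverse())
```

Because the result is expressed relative to the dock rather than in GPS coordinates, GPS error does not enter the final alignment; a practical system would also filter the estimate over several frames and markers (not shown).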

You can see these in action in the video below.

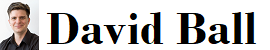
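For the arm-based technique, placing the nozzle over the tank opening with a two-link revolute arm is a planar inverse-kinematics problem. The sketch below uses the standard closed-form solution from the law of cosines; the link lengths, the assumption that the arm moves in a single plane, and the function names are illustrative assumptions rather than details of the AgBot dock.

```python
import math


def forward_kinematics(theta1: float, theta2: float,
                       l1: float, l2: float) -> tuple[float, float]:
    """Arm tip (nozzle) position (x, y) in the arm's base frame for given joint angles."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x: float, y: float, l1: float, l2: float,
                       positive_elbow: bool = True) -> tuple[float, float]:
    """Joint angles (theta1, theta2) that place the arm tip at (x, y).

    Raises ValueError if (x, y) lies outside the reachable annulus
    |l1 - l2| <= r <= l1 + l2. positive_elbow picks between the two
    mirror-image solutions (theta2 >= 0 or theta2 <= 0).
    """
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_t2) > 1.0 + 1e-9:
        raise ValueError(f"target ({x:.3f}, {y:.3f}) is out of reach")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # absorb rounding error at the workspace boundary
    sin_t2 = math.sqrt(1.0 - cos_t2 * cos_t2)
    if not positive_elbow:
        sin_t2 = -sin_t2
    theta2 = math.atan2(sin_t2, cos_t2)
    # Angle to the target minus the angle the second link adds at the first joint.
    theta1 = math.atan2(y, x) - math.atan2(l2 * sin_t2, l1 + l2 * cos_t2)
    return theta1, theta2
```

Each reachable target has two solutions; a real arm would choose the one that respects its joint limits and clears the robot's bodywork. How the tank opening's position was obtained in the project is not described here.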

Vision-based obstacle detection

Reliable obstacle detection in the field is challenging because of the complex, unstructured nature of the environment combined with the large variety of obstacles and lighting conditions. In agriculture, typical obstacles include vehicles, people, animals and equipment on dirt and grass terrain.

This project, led by Patrick Ross, designed a vision-based technique that combines novelty in appearance and structure descriptors to detect obstacles. It requires no prior knowledge of the obstacles and adapts quickly to environmental changes, making it suitable for a large variety of environments. We have demonstrated this system working during both day and night on a wide variety of challenging obstacles.
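The general idea of flagging anything that looks unlike recently seen terrain can be sketched with an online per-descriptor Gaussian model. This is a generic novelty detector, not the published algorithm: the appearance and structure descriptors are treated as plain feature vectors (for example, colour statistics and stereo-derived height statistics, which are assumptions), and the class names, threshold and forgetting rate `alpha` are hypothetical.

```python
class OnlineGaussian:
    """Exponentially weighted per-dimension mean and variance of a descriptor."""

    def __init__(self, dim: int, alpha: float = 0.05, min_var: float = 1e-6):
        self.alpha = alpha        # forgetting rate: larger values adapt faster
        self.min_var = min_var    # variance floor to avoid dividing by ~0
        self.mean = [0.0] * dim
        self.var = [1.0] * dim    # arbitrary initial spread (assumption)
        self.initialised = False

    def novelty(self, x: list[float]) -> float:
        """Average squared z-score: a diagonal Mahalanobis distance divided by dim."""
        return sum((xi - m) ** 2 / max(v, self.min_var)
                   for xi, m, v in zip(x, self.mean, self.var)) / len(x)

    def update(self, x: list[float]) -> None:
        if not self.initialised:
            self.mean = list(x)
            self.initialised = True
            return
        for i, xi in enumerate(x):
            diff = xi - self.mean[i]
            incr = self.alpha * diff
            self.mean[i] += incr
            # Incremental exponentially weighted variance update.
            self.var[i] = (1.0 - self.alpha) * (self.var[i] + diff * incr)


class NoveltyObstacleDetector:
    """Flags an image cell as an obstacle when its combined novelty is high."""

    def __init__(self, appearance_dim: int, structure_dim: int,
                 threshold: float = 9.0, alpha: float = 0.05):
        self.appearance = OnlineGaussian(appearance_dim, alpha)
        self.structure = OnlineGaussian(structure_dim, alpha)
        self.threshold = threshold

    def classify(self, appearance: list[float], structure: list[float]) -> bool:
        if not self.appearance.initialised:
            # Bootstrap: assume the first cell seen is traversable terrain.
            self.appearance.update(appearance)
            self.structure.update(structure)
            return False
        score = self.appearance.novelty(appearance) + self.structure.novelty(structure)
        is_obstacle = score > self.threshold
        if not is_obstacle:
            # Learn only from cells judged normal, so obstacles are not absorbed.
            self.appearance.update(appearance)
            self.structure.update(structure)
        return is_obstacle
```

Because only cells classified as normal update the model, a stationary obstacle does not gradually become "normal", while `alpha` controls how quickly the model follows changes in terrain or lighting.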

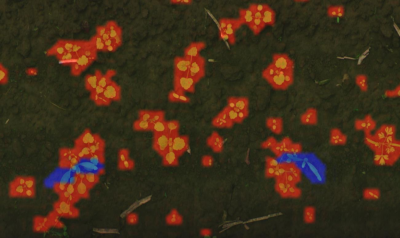

Vision-based localisation

It is common for large agricultural vehicles to steer along pre-planned paths using precise Global Navigation Satellite System (GNSS) receivers. However, high-precision GNSS steering systems remain expensive and require both correction data and an extremely precise map of crop row locations.

This project, led by Andrew English, designed vision-based methods for guiding autonomous robots along crop rows. In particular, the algorithms work in very visually challenging environments without any pre-training. The row-tracking signal is fused in a particle filter with odometry, inertial data and an inexpensive GPS.
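A minimal particle filter illustrating this kind of fusion is sketched below. It assumes straight rows and a row-aligned frame with state (s, d, psi): distance along the row, lateral offset from the row centreline, and heading relative to the row. Odometry supplies speed, the gyro supplies yaw rate, the vision row tracker measures d and psi, and the GPS fix is assumed to be already projected into the row frame. The state, noise values and class names are illustrative assumptions, not the models used in the published system.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Particle:
    s: float    # distance travelled along the crop row (m)
    d: float    # lateral offset from the row centreline (m)
    psi: float  # heading relative to the row direction (rad)
    w: float    # importance weight


def wrap(angle: float) -> float:
    return math.atan2(math.sin(angle), math.cos(angle))


def likelihood(error: float, sigma: float) -> float:
    """Unnormalised Gaussian likelihood of a measurement error."""
    return math.exp(-0.5 * (error / sigma) ** 2)


class RowParticleFilter:
    def __init__(self, n: int = 500, seed: int = 0):
        self.rng = random.Random(seed)
        self.particles = [Particle(0.0, self.rng.gauss(0.0, 0.5),
                                   self.rng.gauss(0.0, 0.1), 1.0 / n) for _ in range(n)]

    def predict(self, speed: float, yaw_rate: float, dt: float,
                speed_sigma: float = 0.05, yaw_sigma: float = 0.02) -> None:
        """Propagate particles with noisy odometry speed and gyro yaw rate."""
        for p in self.particles:
            v = speed + self.rng.gauss(0.0, speed_sigma)
            p.psi = wrap(p.psi + (yaw_rate + self.rng.gauss(0.0, yaw_sigma)) * dt)
            p.s += v * math.cos(p.psi) * dt
            p.d += v * math.sin(p.psi) * dt

    def update_row_tracker(self, d_meas: float, psi_meas: float,
                           d_sigma: float = 0.05, psi_sigma: float = 0.05) -> None:
        for p in self.particles:
            p.w *= likelihood(p.d - d_meas, d_sigma) * likelihood(wrap(p.psi - psi_meas), psi_sigma)
        self._resample()

    def update_gps(self, s_meas: float, d_meas: float, sigma: float = 1.5) -> None:
        for p in self.particles:
            p.w *= likelihood(p.s - s_meas, sigma) * likelihood(p.d - d_meas, sigma)
        self._resample()

    def _resample(self) -> None:
        """Normalise weights, then apply systematic (low-variance) resampling."""
        n = len(self.particles)
        total = sum(p.w for p in self.particles)
        if total <= 0.0:  # every weight underflowed: keep particles, reset weights
            for p in self.particles:
                p.w = 1.0 / n
            return
        step = 1.0 / n
        u = self.rng.uniform(0.0, step)
        i, cumulative = 0, self.particles[0].w / total
        resampled = []
        for k in range(n):
            target = u + k * step
            while target > cumulative and i < n - 1:
                i += 1
                cumulative += self.particles[i].w / total
            src = self.particles[i]
            resampled.append(Particle(src.s, src.d, src.psi, step))
        self.particles = resampled

    def estimate(self) -> tuple[float, float, float]:
        """Weighted mean of (s, d); circular weighted mean of psi."""
        total = sum(p.w for p in self.particles)
        s = sum(p.w * p.s for p in self.particles) / total
        d = sum(p.w * p.d for p in self.particles) / total
        psi = math.atan2(sum(p.w * math.sin(p.psi) for p in self.particles),
                         sum(p.w * math.cos(p.psi) for p in self.particles))
        return s, d, psi
```

In this model the row tracker tightly constrains offset and heading, while the coarse GPS mainly bounds the along-row position, which the row tracker alone does not observe.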

Media and News

This project was in the news.

Robots clear weeds at central Queensland farm – ABC News

Farm robots roll onto paddocks – ABC Rural

State Government Media Release

Weed killing robot trials in Central Highlands a world first

Qld factors farm robots into the near future

Publications

D. Ball, P. Ross, A. English, T. Patten, B. Upcroft, R. Fitch, S. Sukkarieh, G. Wyeth, P. Corke, (2013) “Robotics for Zero Tillage Agriculture”, International Conference on Field and Service Robotics (FSR), Brisbane, Australia.

A. English, P. Ross, D. Ball, B. Upcroft, P. Corke, (2015) “TriggerSync: A Time Synchronisation Tool for ROS”, IEEE International Conference on Robotics and Automation (ICRA), Seattle, USA.

P. Ross, A. English, D. Ball, B. Upcroft, P. Corke, (2015) “Online novelty-based visual obstacle detection for field robotics”, IEEE International Conference on Robotics and Automation (ICRA), Seattle, USA.

P. Ross, A. English, D. Ball, P. Corke, (2014) “A method to quantify a descriptor’s illumination variance”, Australasian Conference on Robotics and Automation (ACRA), Melbourne, Australia.

P. Ross, A. English, D. Ball, B. Upcroft, G. Wyeth, P. Corke, (2014) “Novelty-based visual obstacle detection in agriculture”, IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China.

A. English, P. Ross, D. Ball, P. Corke, (2014) “Vision Based Guidance for Robot Navigation in Agriculture”, IEEE International Conference on Robotics and Automation (ICRA), Hong Kong, China.

P. Ross, A. English, D. Ball, B. Upcroft, G. Wyeth, P. Corke, (2013) “A novel method for analysing lighting variance”, Australasian Conference on Robotics and Automation (ACRA), Sydney, Australia.

A. English, P. Ross, D. Ball, B. Upcroft, G. Wyeth, P. Corke, (2013) “Low Cost Localisation for Agricultural Robotics”, Australasian Conference on Robotics and Automation (ACRA), Sydney, Australia.

Invited presentations

Invited presentation about Agricultural Robotics at SAGA in 2014.

Invited presentation at AusVeg in 2013.